The Engine Room of Digital Worlds

The breathtaking visual landscapes and fluid animations that define contemporary video games are not mere happy accidents; they are the product of sophisticated technological advancements. From the intricate dance of light and shadow rendered by powerful graphics engines to the intelligent decision-making of in-game artificial intelligence, a complex ecosystem of technologies works in concert to bring virtual worlds to life. This section explores the foundational pillars that support the evolving visual culture of gaming, revealing how developers harness these tools to create experiences that captivate and enthrall players worldwide.

Understanding these technologies is key to appreciating the artistry and engineering prowess involved in game development. It's a dynamic field where innovation is constant, with researchers and developers perpetually pushing the boundaries of what's possible. We'll examine the core components that drive visual fidelity, how artificial intelligence is revolutionizing game design, and the emerging frontiers that promise to redefine our interaction with digital realities. This journey will illuminate the intricate interplay between art and science that shapes the games we play and love.

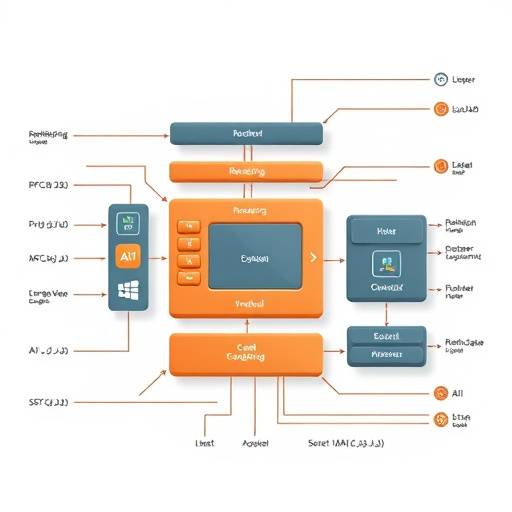

Rendering Engines and Graphics Pipelines

At the core of any visually impressive game lies its rendering engine – the software framework responsible for translating game data into the images players see on their screens. Modern game engines like Unreal Engine, Unity, and Godot are incredibly powerful and versatile, offering a suite of tools for developers to build complex visual experiences. These engines manage everything from 3D model rendering and texture application to lighting, shadows, and post-processing effects.

The journey from raw 3D model data to a final rendered frame involves a sophisticated **graphics pipeline**. This pipeline is a series of stages, each performing specific operations to construct the image. Key stages include:

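- Vertex Processing: Transforms each vertex from model space through world and camera space into clip space, applying scaling, rotation, translation, and projection. A later perspective divide and viewport mapping place it on the screen.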

- Rasterization: Converts geometric primitives (like triangles) into pixels on the screen.

- Pixel (Fragment) Shading: Determines the color of each pixel, calculating lighting, texture sampling, and material properties. This is where much of the visual magic happens, using complex shaders to simulate realistic surfaces.

- Depth and Stencil Testing: Resolves visibility per pixel by comparing depth values, so closer surfaces obscure farther ones regardless of draw order. The stencil buffer masks regions of the screen for effects such as mirrors, portals, and outlines.

- Post-Processing: A final stage that applies effects to the entire rendered image, such as bloom, depth of field, motion blur, and color correction, to enhance realism and artistic style.
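The vertex-processing and depth-testing stages above can be sketched in a few lines. This is a minimal CPU illustration, not how any particular engine implements its pipeline: it assumes an OpenGL-style projection matrix and a camera at the origin looking down the negative Z axis, and the function names (`perspective`, `to_screen`, `depth_test`) are hypothetical.

```python
import math

def perspective(fov_y_deg, aspect, near, far):
    """Build an OpenGL-style perspective matrix (row-major, column vectors)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]

def translate(tx, ty, tz):
    """Model matrix that moves an object by (tx, ty, tz)."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def mat_vec(m, v):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def to_screen(vertex, model, proj, width, height):
    """Vertex processing: model space -> clip space -> NDC -> pixel coordinates."""
    x, y, z = vertex
    world = mat_vec(model, [x, y, z, 1.0])        # view matrix assumed to be identity
    clip = mat_vec(proj, world)
    ndc = [clip[i] / clip[3] for i in range(3)]   # perspective divide
    sx = (ndc[0] + 1.0) * 0.5 * width             # viewport mapping
    sy = (1.0 - ndc[1]) * 0.5 * height            # flip Y: screen origin is top-left
    return sx, sy, ndc[2]

def depth_test(depth_buffer, x, y, depth):
    """Keep a fragment only if it is closer than what the buffer already holds."""
    if depth < depth_buffer[y][x]:
        depth_buffer[y][x] = depth
        return True
    return False
```

A vertex placed five units straight ahead of the camera lands at the center of an 800x600 viewport, and a fragment two units away passes the depth test against it because its depth value is smaller.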

**Physically Based Rendering (PBR)** has become standard. PBR models how light interacts with real surfaces using measurable material properties such as base color, roughness, and metalness, which yields more believable materials, from the rough texture of stone to the reflective sheen of polished metal. Advances in **real-time ray tracing** go further: by tracing the paths of light rays through the scene, they produce accurate reflections, refractions, and global illumination that respond dynamically as lights and objects move, mimicking how light bounces in the physical environment.
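As one small illustration of the physically based approach, many PBR shading models use Schlick's approximation to estimate how much light a surface reflects at a given viewing angle. The sketch below is an assumption-laden example rather than any engine's actual shader: the function name is hypothetical, and the base reflectance of 0.04 is a value commonly used for non-metallic materials.

```python
def schlick_fresnel(cos_theta, f0):
    """Schlick's approximation of Fresnel reflectance.

    cos_theta: cosine of the angle between the view direction and the surface normal.
    f0: reflectance when looking straight at the surface (0.04 is a common
        assumption for non-metals; metals use much higher values).
    """
    cos_theta = max(0.0, min(1.0, cos_theta))   # clamp to the valid range
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5

# Looking straight at a non-metal surface reflects little light,
# while a grazing view reflects almost all of it.
head_on = schlick_fresnel(1.0, 0.04)
grazing = schlick_fresnel(0.0, 0.04)
```

This angle dependence is why a lake looks transparent at your feet but mirror-like toward the horizon, an effect PBR materials reproduce without hand-tuning.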

The efficiency of these pipelines is crucial. Developers constantly optimize by using techniques such as Level of Detail (LOD), occlusion culling, and efficient shader programming to ensure smooth frame rates even with highly detailed visuals. The interplay between hardware (GPUs) and software (rendering engines) continues to drive progress, enabling games to achieve photorealism and elaborate artistic visions.
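Level of Detail is the easiest of these optimizations to show in code. The sketch below picks a mesh variant by camera distance. The thresholds and the function name are hypothetical, and real engines typically base the choice on projected screen size rather than raw distance.

```python
import bisect

# Hypothetical distance thresholds (in world units) between detail levels.
# LOD 0 is the full-detail mesh; higher numbers are progressively simpler.
LOD_DISTANCES = [10.0, 30.0, 80.0]

def select_lod(distance):
    """Return the LOD index to draw for an object at the given camera distance."""
    return bisect.bisect_right(LOD_DISTANCES, distance)

# Nearby objects get the detailed mesh; distant ones get the cheapest variant.
levels = [select_lod(d) for d in (5.0, 10.0, 50.0, 200.0)]
```

With these thresholds an object 5 units away uses LOD 0, while one 200 units away uses LOD 3, the simplest mesh.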

| Technology | Description | Visual Impact | Examples |
|---|---|---|---|
| Physically Based Rendering (PBR) | Simulates real-world light-material interactions. | Highly realistic textures and material properties (metal, wood, fabric). | Unreal Engine 5, Unity HDRP, modern AAA titles. |
| Real-time Ray Tracing | Traces light rays to compute reflections, shadows, and illumination. | Cinematic-quality lighting, realistic reflections on surfaces. | Cyberpunk 2077, Control, Metro Exodus Enhanced Edition. |
| High Dynamic Range (HDR) | Expands the range of luminance, providing brighter highlights and deeper shadows. | More vibrant colors, greater contrast, and a more lifelike image. | Modern display technologies, many current-generation games. |
| Anti-Aliasing and Upscaling Techniques (TAA, DLSS, FSR) | Reduce jagged edges and pixelation on geometric shapes; DLSS and FSR also upscale a lower-resolution render to the display resolution. | Smoother, cleaner visuals, especially on diagonal lines and edges. | Widely used across PC and console gaming. |

"The pursuit of graphical fidelity is a continuous quest. Our goal is to leverage these powerful tools to create worlds that not only look believable but also evoke genuine emotion and wonder in the player."

AI in Game Development: Bringing Worlds to Life

Artificial Intelligence (AI) is no longer just about enemy pathfinding; it's a transformative force that shapes nearly every aspect of modern game development, from character behavior to content creation. The sophisticated AI systems employed today allow for dynamic, responsive, and increasingly believable game worlds.

Character Behavior: At its most fundamental level, AI dictates how non-player characters (NPCs) interact with the game world and the player. Modern AI goes far beyond simple scripted actions. Techniques such as Behavior Trees, Finite State Machines, and utility-based AI allow NPCs to exhibit complex behaviors: reacting intelligently to threats, collaborating with allies, exhibiting unique personalities, and adapting their strategies based on the player's actions. This creates more dynamic and challenging gameplay, making enemies feel less like predictable obstacles and more like intelligent adversaries.
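Of the techniques named above, the finite state machine is the simplest to sketch. The example below models a guard NPC. All states, thresholds, and names are hypothetical and chosen only for illustration, and production AI usually layers far more logic on top.

```python
from enum import Enum, auto

class State(Enum):
    PATROL = auto()
    CHASE = auto()
    ATTACK = auto()
    FLEE = auto()

# Hypothetical tuning values.
SIGHT_RANGE = 15.0    # guard notices the player within this distance
LOSE_RANGE = 20.0     # guard gives up the chase beyond this distance
ATTACK_RANGE = 2.0
LOW_HEALTH = 25

def next_state(state, distance, health):
    """Return the guard's next state from its current state and the situation."""
    if health < LOW_HEALTH:
        return State.FLEE                      # self-preservation overrides everything
    if state is State.PATROL:
        return State.CHASE if distance <= SIGHT_RANGE else State.PATROL
    if state is State.CHASE:
        if distance <= ATTACK_RANGE:
            return State.ATTACK
        if distance > LOSE_RANGE:
            return State.PATROL
        return State.CHASE
    if state is State.ATTACK:
        return State.ATTACK if distance <= ATTACK_RANGE else State.CHASE
    return State.PATROL                        # FLEE with recovered health: resume patrol
```

Because the chase ends at a longer range than it begins, a guard already in pursuit keeps following a player at distance 18, while a patrolling guard at the same distance does not react. Small asymmetries like this keep NPCs from flickering between behaviors.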

Dynamic Storytelling and Dialogue: AI is beginning to power more nuanced narrative experiences. Advanced dialogue systems can generate contextually relevant conversations, making NPCs feel more alive and reactive. Furthermore, AI can dynamically adjust story elements, side quests, or difficulty based on player progression and choices, leading to personalized and replayable narratives.

Machine Learning and Deep Learning: These advanced AI fields are increasingly being integrated. Machine learning can be used for:

- NPC Training: AI agents can learn and adapt by playing against themselves or human players, refining their strategies to become formidable opponents.

- Content Generation: AI can assist in creating assets, animations, or even entire levels, accelerating the development process and introducing novel elements.

- Player Behavior Analysis: AI can analyze player patterns to offer personalized experiences, suggest relevant content, or dynamically adjust difficulty to maintain engagement.

- Procedural Animation: AI can generate more natural and responsive character animations in real-time, based on context and physics, rather than relying solely on pre-made motion capture sequences.
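The idea of adjusting difficulty from observed player behavior can be shown without any machine learning at all. The sketch below is a simple rule-based stand-in, not a learned model: it tracks recent encounter outcomes and nudges a difficulty multiplier toward a target win rate. The class name and every parameter value are assumptions made for illustration.

```python
from collections import deque

class DifficultyTuner:
    """Nudge difficulty so the player's recent win rate approaches a target."""

    def __init__(self, target_win_rate=0.6, window=10, step=0.1):
        self.target = target_win_rate
        self.results = deque(maxlen=window)   # only the most recent outcomes count
        self.step = step
        self.difficulty = 1.0                 # 1.0 = baseline difficulty

    def record(self, player_won):
        """Record one encounter outcome and return the updated difficulty."""
        self.results.append(1 if player_won else 0)
        win_rate = sum(self.results) / len(self.results)
        if win_rate > self.target:
            self.difficulty += self.step      # player is cruising: push harder
        elif win_rate < self.target:
            self.difficulty -= self.step      # player is struggling: ease off
        # Clamp so the game never becomes trivial or impossible.
        self.difficulty = max(0.5, min(2.0, self.difficulty))
        return self.difficulty
```

A learned system would replace the fixed rule with a model trained on player data, but the feedback loop of observing, comparing against a target, and adjusting has the same shape.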

The ethical considerations and ongoing research into AI safety and fairness are also critical as these systems become more powerful. The goal is to create AI that enhances the player's experience, fosters emergent gameplay, and contributes to truly living, breathing game worlds.

| AI Domain | Key Applications | Impact on Visual Culture | Notable Examples |
|---|---|---|---|
| Behavioral AI | NPC decision-making, pathfinding, combat tactics, social simulation. | More believable characters, emergent gameplay, challenging adversaries. | Grand Theft Auto V (pedestrian and traffic AI), Alien: Isolation (single, adaptive antagonist). |
| Machine Learning (ML) | NPC adaptation, player behavior analysis, content generation assistance. | Dynamic challenges, personalized experiences, novel game elements. | AI opponents in fighting games (e.g., Mortal Kombat), AI-assisted asset creation tools. |
| Natural Language Processing (NLP) | Dynamic dialogue generation, understanding player input. | More engaging and responsive NPC interactions. | Early implementations in RPGs and adventure games, future potential for advanced conversational agents. |
| Procedural Animation | Generating fluid and context-aware character movements. | More naturalistic character movement and reaction. | Character locomotion systems in many modern engines. |

Procedural Content Generation (PCG)

Procedural Content Generation (PCG) is a powerful technique that uses algorithms to create game assets and content, rather than relying solely on manual creation. This method allows developers to generate vast, unique, and complex game worlds, levels, items, and even narratives with a fraction of the human effort required for traditional asset creation.

The core principle of PCG is the use of algorithms, often driven by a random seed, to produce outputs that are varied yet reproducible: the same seed always yields the same content, while a different seed yields something new. This is invaluable for:

- World Building: Creating expansive open worlds with diverse landscapes, biomes, and geological features. Think of procedurally generated planets in space exploration games or endless terrains in survival titles.

- Level Design: Generating unique dungeons, mazes, or combat arenas for replayability. Each playthrough can offer a fresh layout, keeping players engaged.

- Item and Equipment Generation: Crafting a virtually endless supply of weapons, armor, and accessories with varying stats, appearances, and effects, common in loot-driven RPGs.

- Environmental Details: Populating worlds with procedurally generated vegetation, rocks, and small details that add to the sense of scale and realism.

- Narrative and Quest Generation: While more complex, AI-driven PCG can assist in creating dynamic quest lines and narrative branches tailored to player actions.
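The seed-driven approach behind the uses listed above can be sketched with a classic "drunkard's walk" cave generator. This is a toy illustration under stated assumptions, not the method any particular game uses: the function name, grid size, and step count are all hypothetical.

```python
import random

def generate_cave(seed, width=20, height=10, steps=120):
    """Carve a cave by letting a random walker dig through solid rock.

    The same seed always produces the same layout, so a world can be
    stored or shared as a single number instead of a full map.
    """
    rng = random.Random(seed)                 # private generator: no global state
    grid = [["#"] * width for _ in range(height)]
    x, y = width // 2, height // 2
    grid[y][x] = "."
    for _ in range(steps):
        dx, dy = rng.choice([(1, 0), (-1, 0), (0, 1), (0, -1)])
        # Clamp so the outer border always stays solid wall.
        x = min(max(x + dx, 1), width - 2)
        y = min(max(y + dy, 1), height - 2)
        grid[y][x] = "."
    return ["".join(row) for row in grid]

if __name__ == "__main__":
    print("\n".join(generate_cave(seed=42)))
```

Because the walker only ever steps to an adjacent cell, every open tile is reachable from the start, so the layout is guaranteed to be connected no matter which seed is used.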

The benefits of PCG are numerous. It significantly reduces development time and cost for large-scale content. It offers unparalleled replayability, as players can experience unique content each time they play. It can also lead to emergent gameplay possibilities, where players discover unexpected combinations and scenarios within the generated content. Games like No Man's Sky, with its roughly 18 quintillion planets, or Minecraft, with its procedurally generated terrain, are prime examples of PCG's expansive potential.

However, PCG is not without its challenges. Ensuring that generated content is consistently high-quality, aesthetically pleasing, and mechanically sound requires careful algorithm design and extensive testing. The art lies in balancing randomness with deterministic rules that ensure a coherent and enjoyable player experience. The fusion of art direction and algorithmic generation is crucial for creating worlds that feel both vast and handcrafted.
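Balancing randomness with deterministic rules might look like the following loot generator, in which rarity and stat distribution are random but a fixed stat budget keeps every item mechanically sound. The rarity weights, budgets, and function name are hypothetical values chosen for illustration.

```python
import random

# Hypothetical design rules: how often each rarity drops, and how many
# stat points an item of that rarity is allowed to have in total.
RARITY_WEIGHTS = {"common": 70, "rare": 25, "legendary": 5}
STAT_BUDGET = {"common": 10, "rare": 20, "legendary": 35}

def generate_weapon(seed):
    """Generate a weapon whose random stats always respect the rarity's budget."""
    rng = random.Random(seed)
    rarity = rng.choices(
        list(RARITY_WEIGHTS), weights=list(RARITY_WEIGHTS.values())
    )[0]
    budget = STAT_BUDGET[rarity]
    damage = rng.randint(1, budget - 1)   # random split of the budget...
    speed = budget - damage               # ...but the total is fixed by rule
    return {"rarity": rarity, "damage": damage, "speed": speed}
```

The randomness supplies variety, while the budget rule guarantees that no generated weapon is stronger than its rarity allows, which is the kind of constraint designers rely on to keep generated content fair.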

Immersive Technologies: VR and AR

Virtual Reality (VR) and Augmented Reality (AR) represent the bleeding edge of interactive experiences, offering entirely new ways to engage with digital content. These technologies are not just about enhancing visuals; they fundamentally alter player immersion and interaction.

Virtual Reality (VR): VR aims to transport players into a completely digital environment. This is achieved through a headset that displays stereoscopic images, tricking the brain into perceiving depth, and typically includes head-tracking sensors that allow the player's view to change as they move their head. VR controllers provide hand-tracking capabilities, enabling players to interact with the virtual world in a direct, intuitive manner – reaching out to grab objects, swing weapons, or manipulate interfaces with their own hands. The visual fidelity and responsiveness of VR are paramount, as any disconnect between physical movement and visual feedback can lead to motion sickness. Advanced VR development focuses on high refresh rates, low latency, and detailed graphical rendering to create a convincing sense of presence. Games like Half-Life: Alyx have set new benchmarks for what is possible in VR, showcasing stunning visuals and deeply interactive gameplay.
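The emphasis on high refresh rates translates directly into a time budget per frame, and the stereoscopic display means each frame is rendered from two slightly offset viewpoints. The sketch below works through both pieces of arithmetic. The function names are hypothetical, 90 Hz is used as a common headset refresh rate, and the 0.064 m eye separation is an assumed typical value.

```python
def frame_budget_ms(refresh_hz):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz

def eye_positions(head_position, ipd=0.064):
    """Offset the head position sideways to get left and right eye positions.

    Assumes the head faces straight down the Z axis with no rotation;
    ipd is the distance between the eyes in meters (assumed value).
    """
    x, y, z = head_position
    half = ipd / 2.0
    return (x - half, y, z), (x + half, y, z)

budget_60 = frame_budget_ms(60)   # about 16.7 ms on a typical monitor
budget_90 = frame_budget_ms(90)   # about 11.1 ms on a 90 Hz headset
left_eye, right_eye = eye_positions((0.0, 1.7, 0.0))
```

Moving from 60 Hz to 90 Hz cuts the budget by a third, and the scene must be drawn for both eyes within it, which is why VR titles lean so heavily on the optimization techniques that flat-screen games treat as optional.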

Augmented Reality (AR): AR overlays digital information and graphics onto the real world, typically viewed through a smartphone screen, tablet, or specialized AR glasses. Unlike VR, AR does not aim to replace reality but to enhance it. This allows for unique gameplay experiences where virtual characters can appear in your living room, or digital interfaces can interact with your physical surroundings. The visual challenges in AR involve seamlessly blending digital objects with the real environment, ensuring accurate lighting and perspective, and managing performance on mobile devices. While still evolving, AR holds immense potential for casual gaming, educational applications, and innovative new forms of interactive entertainment that bridge the gap between our physical and digital lives.


Empowering Accessibility Through Technology

The same technologies that drive visual innovation also play a crucial role in making games more accessible to a wider audience. From advanced rendering that can be scaled for performance to AI-driven assistance features, technology is breaking down barriers.

Read more about how these advancements are being utilized to ensure everyone can enjoy the rich visual experiences gaming offers in our Accessibility section.